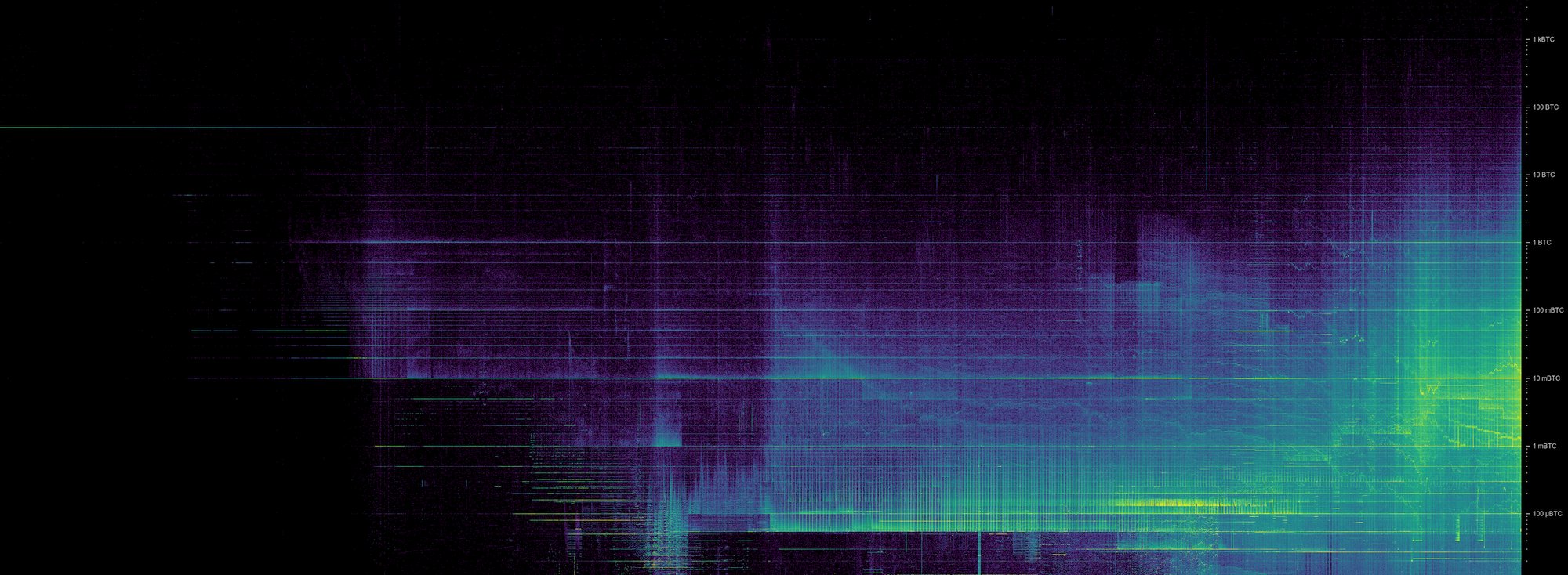

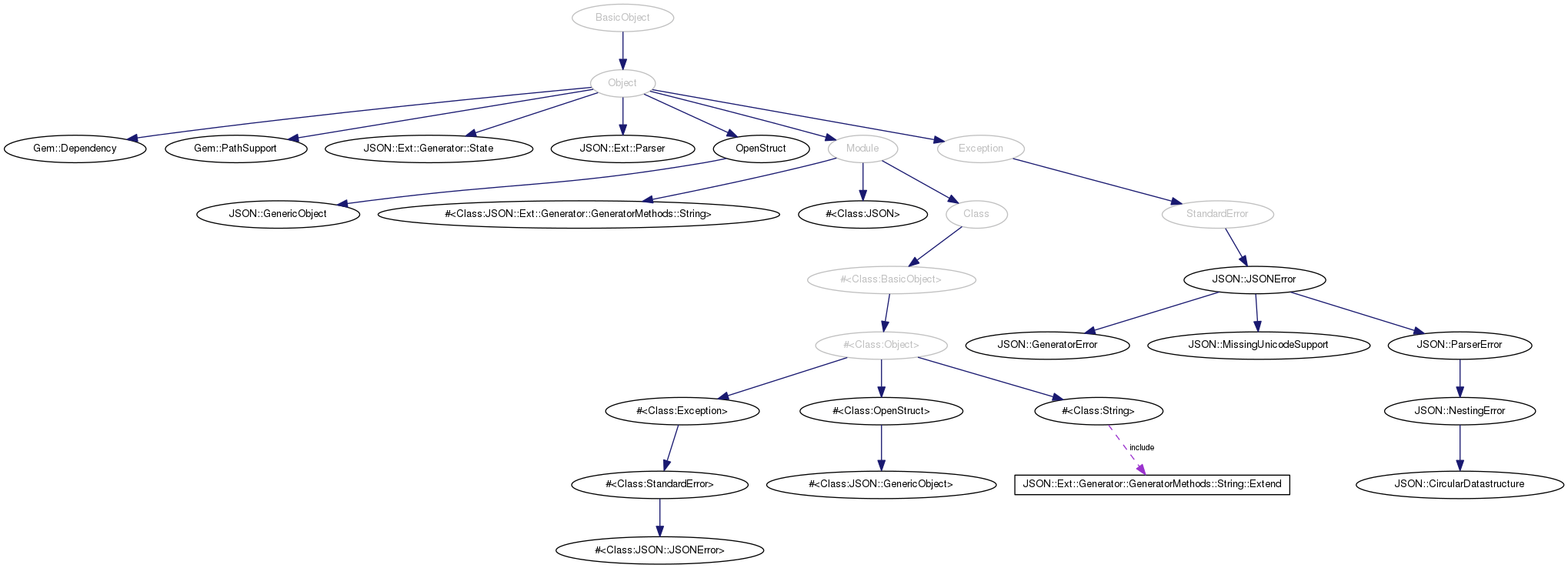

Comprehensive C++ Hashmap Benchmarks 2022

Where I've spent way too much time creating benchmarks of C++ hashmaps

It’s been over 3 years since I spent considerable time finding the best C++ hashmap. After several requests I finally gave in and redid the benchmark with the state of C++ hashmaps as of August 2022. This took much more work than I initially anticipated, mostly because benchmarks take a looong time, and writing everything up and creating a representation that is actually useful takes even more time. Thanks to everyone who annoyingly kept asking me for updates!
